Machine Learning (ML) is a branch of Artificial Intelligence (AI) that enables computer systems to learn from data without being explicitly programmed. The goal of ML is to develop algorithms and models that can automatically identify patterns and make predictions or decisions from data.

In general, there are three main types of ML: supervised learning, unsupervised learning, and reinforcement learning.

Supervised learning is the process of training a model on a labeled dataset, where the desired output (label) is provided for each input. Common tasks include classification and regression.

Unsupervised learning is the process of training a model on an unlabeled dataset, where the goal is to find patterns or structure in the data. Common tasks include clustering and dimensionality reduction.

Reinforcement learning is a type of ML where an agent learns to make decisions by interacting with an environment and receiving feedback in the form of rewards or penalties.

There are various techniques and algorithms used in ML, such as:

Decision Trees: A decision tree is a flowchart-like tree structure, where each internal node represents a feature (or attribute), each branch represents a decision rule, and each leaf node represents the outcome.

Support Vector Machines (SVMs): A supervised learning algorithm that can be used for classification or regression tasks.

Neural Networks: A set of algorithms, modeled loosely after the human brain, that are designed to recognize patterns.

Bayesian Networks: A probabilistic graphical model that represents a set of variables and their conditional dependencies via a directed acyclic graph.

Genetic Algorithms: A search heuristic that is inspired by the process of natural selection.

To get started with ML, it's important to have a strong understanding of linear algebra, calculus, and probability. Additionally, many popular platforms and libraries, such as TensorFlow, PyTorch, and scikit-learn, make it easier to implement ML algorithms and models.

Building an ML model typically involves the following steps:

Understanding the problem: This includes identifying the inputs, outputs, and constraints of the problem.

Choosing a model: This includes selecting a model architecture and hyperparameters that are appropriate for the problem and dataset.

Choosing a loss function: This includes selecting a function that measures the difference between the model's predictions and the true outputs.

Choosing an optimizer: This includes selecting an algorithm that updates the model's parameters to minimize the loss function.

Choosing a metric: This includes selecting a function that measures the performance of the model on a held-out dataset.

Choosing a dataset: This includes selecting a dataset that is appropriate for the problem and the model.

Machine learning is an iterative process, and the model is typically trained multiple times using different combinations of hyperparameters, architectures, and datasets. The goal is to find the combination that gives the best performance on the held-out dataset.
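
The steps above (model, loss function, optimizer, metric, dataset) can be sketched in a few lines of NumPy. This is only a minimal illustration under stated assumptions: the data is synthetic, the model is a straight line, and the learning rate and iteration count are arbitrary choices rather than recommended values.

```python
import numpy as np

rng = np.random.default_rng(0)

# Dataset: synthetic points from y = 3x + 2 plus noise (made up for illustration).
X = rng.uniform(-1.0, 1.0, size=200)
y = 3.0 * X + 2.0 + rng.normal(0.0, 0.1, size=200)

# Hold out the last 50 points; they are never used for training.
X_train, y_train = X[:150], y[:150]
X_test, y_test = X[150:], y[150:]

def predict(w, b, x):
    """Model: a straight line with two parameters."""
    return w * x + b

def mse(y_true, y_pred):
    """Loss function and metric: mean squared error."""
    return float(np.mean((y_true - y_pred) ** 2))

# Optimizer: plain gradient descent on the training loss.
w, b = 0.0, 0.0
learning_rate = 0.1  # a hyperparameter, chosen arbitrarily here
for _ in range(500):
    error = predict(w, b, X_train) - y_train
    w -= learning_rate * 2.0 * np.mean(error * X_train)
    b -= learning_rate * 2.0 * np.mean(error)

# Metric evaluated on the held-out data.
test_mse = mse(y_test, predict(w, b, X_test))
print(f"w={w:.2f}, b={b:.2f}, held-out MSE={test_mse:.4f}")
```

Rerunning the loop with a different learning rate or model and comparing `test_mse` is the iterative process described above in miniature.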

Overfitting and underfitting are common issues that arise during the learning process. Overfitting occurs when the model is too complex and memorizes the training data instead of generalizing to new data. Underfitting occurs when the model is too simple and is not able to capture the underlying patterns in the data.

To prevent overfitting, techniques such as regularization and early stopping can be used. To address underfitting, techniques such as increasing the model complexity, adding more informative features, or reducing regularization can be used.
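
A small experiment can make underfitting and overfitting concrete. The sketch below fits polynomials of increasing degree to a few noisy samples of a sine curve; the data, the degrees, and the sample sizes are all assumptions chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

def make_data(n):
    """Noisy samples of sin(2*pi*x); synthetic data for illustration only."""
    x = rng.uniform(0.0, 1.0, size=n)
    y = np.sin(2.0 * np.pi * x) + rng.normal(0.0, 0.2, size=n)
    return x, y

x_train, y_train = make_data(15)    # small training set
x_test, y_test = make_data(200)     # larger held-out set

def fit_and_score(degree):
    """Fit a polynomial of the given degree; return (train MSE, test MSE)."""
    coeffs = np.polyfit(x_train, y_train, deg=degree)
    train_mse = np.mean((np.polyval(coeffs, x_train) - y_train) ** 2)
    test_mse = np.mean((np.polyval(coeffs, x_test) - y_test) ** 2)
    return float(train_mse), float(test_mse)

# Degree 1 is too simple for a sine curve; degree 9 has enough freedom
# to start fitting the noise in 15 points.
results = {degree: fit_and_score(degree) for degree in (1, 3, 9)}
for degree, (train_mse, test_mse) in results.items():
    print(f"degree={degree}: train MSE={train_mse:.3f}, test MSE={test_mse:.3f}")
```

Training error can only go down as the degree grows, so it is the gap between training and test error that signals overfitting, while a high error on both signals underfitting.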


Supervised Learning is a type of machine learning in which a model or algorithm is trained on a labeled dataset, and then used to make predictions or take actions in new, unseen situations. Here are some notes on Supervised Learning with reference to Machine Learning Techniques:

Definition: Supervised learning is a process where a model learns from labeled training data, which includes input-output pairs, and makes predictions on unseen data. The goal is to learn a function that maps inputs to outputs.

Types of Supervised Learning:

Classification: The goal is to predict a categorical label (e.g. spam or not spam) based on the input data. Common algorithms include logistic regression, decision trees, and support vector machines.

Regression: The goal is to predict a continuous value (e.g. price of a stock) based on the input data. Common algorithms include linear regression and polynomial regression.

Training and Testing: In supervised learning, the training data is used to train the model and the testing data is used to evaluate its performance. The testing data should be independent of the training data so that the evaluation reflects performance on unseen data and reveals overfitting.

Evaluation Metrics: The performance of a supervised learning model is typically evaluated using metrics such as accuracy, precision, recall, and F1 score (for classification) or mean squared error (for regression).

Applications: Supervised learning algorithms are widely used in a variety of applications, including natural language processing, computer vision, speech recognition, and bioinformatics.

Limitations: Supervised learning algorithms can be limited by the quality and representativeness of the training data, and may not be able to generalize well to new, unseen data. Additionally, they require labeled data to train the model which is not always available and it can be time-consuming and expensive to label data.

Supervised Learning is a fundamental concept in the field of Machine Learning Techniques, and is widely used in a variety of applications. A comprehensive understanding of Supervised Learning includes not only the basic concepts and definitions but also the underlying algorithms, techniques, and best practices used to implement it.

Definition: Supervised learning is a process where a model learns from labeled training data, which includes input-output pairs, and makes predictions on unseen data. The goal is to learn a function that maps inputs to outputs. The model is trained on a labeled dataset, where each example has an input and an associated output. Once the model is trained, it can be used to make predictions on new, unseen data.

Types of Supervised Learning: There are two main types of supervised learning: classification and regression.

Classification: The goal is to predict a categorical label (e.g. spam or not spam) based on the input data. Common algorithms for classification include logistic regression, decision trees, k-nearest neighbors, and support vector machines.

Regression: The goal is to predict a continuous value (e.g. price of a stock) based on the input data. Common algorithms for regression include linear regression, polynomial regression, and decision tree regression.

Training and Testing: In supervised learning, the training data is used to train the model and the testing data is used to evaluate its performance. The testing data should be independent of the training data so that the evaluation reflects performance on unseen data and reveals overfitting. A common practice is to split the data into a training set and a test set, where the model is trained on the training set and evaluated on the test set.
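
As a rough illustration of the train/test practice described above, the following sketch shuffles a synthetic two-class dataset, holds out 20% of it, and evaluates a simple k-nearest-neighbors classifier on the held-out part. The data, the 80/20 ratio, and `k=3` are assumptions made for the example.

```python
import numpy as np

rng = np.random.default_rng(42)

# Synthetic two-class data: two Gaussian blobs (illustrative only).
class0 = rng.normal(loc=[-2.0, -2.0], scale=1.0, size=(100, 2))
class1 = rng.normal(loc=[2.0, 2.0], scale=1.0, size=(100, 2))
X = np.vstack([class0, class1])
y = np.array([0] * 100 + [1] * 100)

# Shuffle, then hold out 20% of the examples as a test set.
order = rng.permutation(len(X))
X, y = X[order], y[order]
split = int(0.8 * len(X))
X_train, y_train = X[:split], y[:split]
X_test, y_test = X[split:], y[split:]

def knn_predict(X_train, y_train, X_query, k=3):
    """Predict the majority label among the k nearest training points."""
    # Pairwise Euclidean distances, shape (n_query, n_train).
    dists = np.linalg.norm(X_query[:, None, :] - X_train[None, :, :], axis=2)
    nearest = np.argsort(dists, axis=1)[:, :k]
    votes = y_train[nearest]  # labels of the k neighbours
    return (votes.mean(axis=1) > 0.5).astype(int)

# The test set plays no part in "training"; it is only used for scoring.
test_accuracy = float(np.mean(knn_predict(X_train, y_train, X_test) == y_test))
print(f"test accuracy: {test_accuracy:.2f}")
```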

Evaluation Metrics: The performance of a supervised learning model is typically evaluated using metrics such as accuracy, precision, recall, and F1 score (for classification) or mean squared error (for regression). These metrics allow us to quantitatively measure how well the model is performing and compare the performance of different models. Other evaluation metrics, such as ROC-AUC and log loss, can also be used depending on the type of problem.
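
The metrics named above can be computed directly from their definitions. The sketch below assumes binary labels encoded as 0 and 1, with 1 as the positive class; the example labels are made up.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall and F1 for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives

    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}

def mean_squared_error(y_true, y_pred):
    """Average squared difference between targets and predictions."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

# Made-up labels: 3 true positives, 1 false positive, 1 false negative,
# 3 true negatives, so all four classification metrics equal 0.75.
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 0, 1, 0, 1, 0, 0, 1]
metrics = classification_metrics(y_true, y_pred)

regression_error = mean_squared_error([3.0, 5.0], [2.0, 7.0])  # (1 + 4) / 2 = 2.5
```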

Feature Selection and Engineering: Supervised learning models are sensitive to the features of the input data, and the performance of the model can be greatly improved by selecting relevant features and transforming the input data in a way that is more informative. Feature selection techniques such as Recursive Feature Elimination and Correlation-based Feature Selection can be used to select the most relevant features, and techniques such as PCA and LDA can be used to transform the input data.

Hyperparameter tuning: Supervised learning models often have a number of parameters that must be set before training. These are called hyperparameters and their optimal values are determined by tuning them on a validation set. Common techniques for hyperparameter tuning include grid search, random search and Bayesian optimization.
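
Grid search on a validation set can be sketched as follows. The example tunes the regularization strength of a ridge regression, which is a swapped-in model chosen only because it has a single, easy-to-understand hyperparameter; the data and the grid values are assumptions.

```python
import numpy as np

rng = np.random.default_rng(1)

# Synthetic regression data: 10 features, only the first 3 matter.
n, d = 120, 10
X = rng.normal(size=(n, d))
true_w = np.zeros(d)
true_w[:3] = [2.0, -1.0, 0.5]
y = X @ true_w + rng.normal(0.0, 0.5, size=n)

# Three disjoint subsets: train, validation (for tuning), test (final check).
X_train, y_train = X[:60], y[:60]
X_val, y_val = X[60:90], y[60:90]
X_test, y_test = X[90:], y[90:]

def fit_ridge(X, y, alpha):
    """Closed-form ridge regression: solve (X^T X + alpha I) w = X^T y."""
    return np.linalg.solve(X.T @ X + alpha * np.eye(X.shape[1]), X.T @ y)

def mse(X, y, w):
    return float(np.mean((X @ w - y) ** 2))

# Grid search: train once per candidate value, score on the validation set.
grid = [0.01, 0.1, 1.0, 10.0, 100.0]
val_scores = {alpha: mse(X_val, y_val, fit_ridge(X_train, y_train, alpha))
              for alpha in grid}
best_alpha = min(val_scores, key=val_scores.get)

# The test set is touched only once, after the hyperparameter is fixed.
test_mse = mse(X_test, y_test, fit_ridge(X_train, y_train, best_alpha))
print(f"best alpha={best_alpha}, test MSE={test_mse:.3f}")
```

Random search would replace the fixed `grid` with randomly sampled values; the rest of the procedure stays the same.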

Bias-variance trade-off: Supervised learning models can suffer from either high bias or high variance, and it is important to understand the bias-variance trade-off to select the best model. High bias models are simple and tend to underfit the data, while high variance models are complex and tend to overfit the data. Techniques like regularization and early stopping can be used to balance bias and variance, and k-fold cross-validation can be used to estimate how well a model generalizes when choosing between models.
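
The k-fold cross-validation mentioned above can be written in a few lines. This sketch uses it to compare polynomial models of different complexity on synthetic data; the helper names `k_fold_splits` and `cross_val_mse` are hypothetical, not part of any library.

```python
import numpy as np

def k_fold_splits(n_samples, k, seed=0):
    """Yield (train_idx, val_idx) pairs that partition the data into k folds."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(n_samples), k)
    for i in range(k):
        val_idx = folds[i]
        train_idx = np.concatenate([folds[j] for j in range(k) if j != i])
        yield train_idx, val_idx

def cross_val_mse(x, y, degree, k=5):
    """Average validation MSE of a degree-`degree` polynomial over k folds."""
    scores = []
    for train_idx, val_idx in k_fold_splits(len(x), k):
        coeffs = np.polyfit(x[train_idx], y[train_idx], deg=degree)
        pred = np.polyval(coeffs, x[val_idx])
        scores.append(np.mean((pred - y[val_idx]) ** 2))
    return float(np.mean(scores))

# Synthetic data: noisy samples of a sine curve (illustrative only).
rng = np.random.default_rng(0)
x = rng.uniform(0.0, 1.0, size=60)
y = np.sin(2.0 * np.pi * x) + rng.normal(0.0, 0.2, size=60)

# A straight line (high bias) scores worse than a cubic on this data.
cv_scores = {degree: cross_val_mse(x, y, degree) for degree in (1, 3, 9)}
print(cv_scores)
```

Every example is used for validation exactly once, which gives a steadier estimate than a single train/validation split when data is scarce.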

Ensemble Methods: Ensemble methods are used to combine the predictions of multiple models to improve the overall performance. Common ensemble methods include bagging, boosting, and stacking. Bagging methods such as Random Forest and boosting methods such as AdaBoost are widely used in supervised learning.

Applications: Supervised learning algorithms are widely used in a variety of applications, including natural language processing, computer vision, speech recognition, bioinformatics, financial forecasting, and customer churn prediction.


In conclusion, a comprehensive understanding of Supervised Learning with reference to Machine Learning Techniques requires knowledge of the basic concepts, types of learning, underlying algorithms, techniques, and best practices used to implement it, as well as an understanding of its limitations and applications. It's important to keep in mind that this is an active area of research and new techniques and algorithms are being developed all the time.

Unsupervised Learning is a type of machine learning in which a model or algorithm is trained on an unlabeled dataset, and then used to identify patterns or structure in the data. Here are some notes on Unsupervised Learning with reference to Machine Learning Techniques:

Definition: Unsupervised learning is a process where a model learns from unlabeled data, and tries to identify patterns or structure in the data. The goal is to discover hidden structure in the data, rather than making predictions.

Types of Unsupervised Learning: There are several types of unsupervised learning, including clustering, dimensionality reduction, and anomaly detection.

Clustering: The goal is to group similar data points together. Common algorithms include k-means, hierarchical clustering, and DBSCAN.
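
To show what grouping similar points looks like in practice, here is a minimal k-means sketch (Lloyd's algorithm) in NumPy. The two-blob dataset, the random initialization, and the iteration limit are assumptions made for the example.

```python
import numpy as np

def k_means(X, k, n_iters=100, seed=0):
    """Basic k-means: alternate between assigning points and moving centroids."""
    rng = np.random.default_rng(seed)
    # Initialise centroids with k distinct data points chosen at random.
    centroids = X[rng.choice(len(X), size=k, replace=False)]
    for _ in range(n_iters):
        # Assignment step: each point joins its nearest centroid.
        dists = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        # Update step: each centroid moves to the mean of its points
        # (an empty cluster keeps its previous centroid).
        new_centroids = np.array([
            X[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
            for j in range(k)
        ])
        if np.allclose(new_centroids, centroids):
            break  # assignments have stopped changing
        centroids = new_centroids
    return labels, centroids

# Synthetic data: two well-separated blobs, no labels given to the algorithm.
rng = np.random.default_rng(0)
blob_a = rng.normal(loc=[0.0, 0.0], scale=0.5, size=(50, 2))
blob_b = rng.normal(loc=[5.0, 5.0], scale=0.5, size=(50, 2))
X = np.vstack([blob_a, blob_b])

labels, centroids = k_means(X, k=2)
```

Which blob ends up as cluster 0 or cluster 1 depends on the initialization; only the grouping itself is meaningful.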

Dimensionality Reduction: The goal is to reduce the number of features in the data while preserving as much information as possible. Common algorithms include PCA, LLE, and t-SNE.
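
As a sketch of how PCA reduces the number of features, the example below projects three correlated features onto a single principal component using the singular value decomposition. The data is synthetic and the function name `pca` is a hypothetical helper.

```python
import numpy as np

def pca(X, n_components):
    """Project X onto its top principal components via SVD."""
    X_centered = X - X.mean(axis=0)
    # Rows of Vt are the principal directions, ordered by explained variance.
    U, S, Vt = np.linalg.svd(X_centered, full_matrices=False)
    components = Vt[:n_components]
    explained_ratio = (S ** 2) / np.sum(S ** 2)
    return X_centered @ components.T, components, explained_ratio[:n_components]

# Synthetic data: the second feature is almost a multiple of the first,
# and the third is low-variance noise.
rng = np.random.default_rng(0)
t = rng.normal(size=200)
X = np.column_stack([
    t,
    2.0 * t + rng.normal(0.0, 0.1, size=200),
    rng.normal(0.0, 0.1, size=200),
])

Z, components, ratio = pca(X, n_components=1)
print(f"reduced shape: {Z.shape}, variance kept: {ratio[0]:.3f}")
```

Because the three features carry essentially one underlying signal here, a single component keeps almost all of the variance.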

Anomaly Detection: The goal is to identify data points that do not conform to the general pattern of the data. Common algorithms include One-class SVM and Isolation Forest.

Evaluation Metrics: Evaluating the performance of unsupervised learning models can be challenging, as there is no clear criterion for success. Common metrics include silhouette score, Davies-Bouldin index, and Calinski-Harabasz index for Clustering.

Applications: Unsupervised learning algorithms are widely used in a variety of applications, including image compression, market segmentation, and anomaly detection in financial and healthcare industries.

Limitations: Unsupervised learning algorithms can be limited by the quality and representativeness of the data, and may not be able to identify all the underlying patterns or structure in the data. Additionally, it may not be clear what the output of the model represents and how to interpret the results.

In conclusion, a comprehensive understanding of Unsupervised Learning with reference to Machine Learning Techniques requires knowledge of the basic concepts, types of learning, underlying algorithms, techniques, and best practices used to implement it, as well as an understanding of its limitations and applications. It's important to keep in mind that this is an active area of research and new techniques and algorithms are being developed all the time.

Reinforcement Learning is a type of machine learning in which an agent is not trained on a fixed dataset but learns by interacting with an environment, and is then used to take actions in new, unseen situations. Here are some notes on Reinforcement Learning with reference to Machine Learning Techniques:

Definition: Reinforcement learning is a process where an agent learns from feedback from the environment, in the form of rewards or penalties, and uses it to choose actions in the states it encounters. The goal is to learn a policy that maximizes the cumulative reward over time.

Types of Reinforcement Learning: There are two main types of reinforcement learning: policy-based and value-based.

Policy-Based: The goal is to directly learn a mapping between states and actions, called a policy, which determines the action that should be taken in each state. Common algorithms include policy gradient methods such as REINFORCE.

Value-Based: The goal is to learn a value function, which estimates the value of being in each state (or of taking each action in each state), and to derive the policy from it. Common algorithms include value iteration and Q-learning. Policy iteration, a related dynamic programming method, alternates between evaluating the value function of a policy and improving that policy.

Training and Testing: In reinforcement learning there is usually no fixed training dataset. The agent generates its own experience by interacting with the environment during training. Its performance is then evaluated by running the learned policy, typically without exploration, on separate evaluation episodes.

Evaluation Metrics: The performance of a reinforcement learning model is typically evaluated using metrics such as cumulative reward and average reward per episode.
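
The ideas above (an agent, rewards, a value-based method, and reward per episode as the metric) can be illustrated with tabular Q-learning on a toy problem. The environment is a made-up five-state corridor where only reaching the right end is rewarded; the learning rate, discount factor, and exploration rate are arbitrary assumptions.

```python
import numpy as np

N_STATES, GOAL = 5, 4   # states 0..4 in a row; state 4 ends the episode
LEFT, RIGHT = 0, 1

def step(state, action):
    """Toy environment: move left or right; reward 1.0 on reaching the goal."""
    next_state = max(state - 1, 0) if action == LEFT else state + 1
    done = next_state == GOAL
    return next_state, (1.0 if done else 0.0), done

def train_q_learning(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    rng = np.random.default_rng(seed)
    Q = np.zeros((N_STATES, 2))  # one value per (state, action) pair
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            if rng.random() < epsilon:
                action = int(rng.integers(2))  # explore
            else:
                # Exploit, breaking ties between equal values at random.
                best = np.flatnonzero(Q[state] == Q[state].max())
                action = int(rng.choice(best))
            next_state, reward, done = step(state, action)
            # Q-learning update: move towards reward + discounted best next value.
            target = reward + (0.0 if done else gamma * Q[next_state].max())
            Q[state, action] += alpha * (target - Q[state, action])
            state = next_state
    return Q

def run_greedy_episode(Q, max_steps=100):
    """Evaluate the learned policy without exploration; return total reward."""
    state, total_reward = 0, 0.0
    for _ in range(max_steps):
        state, reward, done = step(state, int(Q[state].argmax()))
        total_reward += reward
        if done:
            break
    return total_reward

Q = train_q_learning()
greedy_policy = Q[:GOAL].argmax(axis=1)  # best action in each non-goal state
episode_reward = run_greedy_episode(Q)
```

The policy is never stored explicitly; it is read off the learned values by picking the highest-valued action in each state, which is what makes this a value-based method.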

Applications:

Limitations:

A well-defined learning problem is a crucial aspect of machine learning. It is important to define the problem clearly before attempting to solve it with machine learning techniques. A well-defined learning problem includes a clear statement of the task, input data, output data and performance measure. Here are some notes on well-defined learning problems with reference to Machine Learning Techniques:

Task: The task should be defined clearly and in a specific way. For example, "predicting the price of a stock" is a more specific task than "predicting the stock market."

Input Data: The input data should be clearly defined and understood. It should include the type of data, format, and any preprocessing that needs to be done.

Output Data: The output data should be clearly defined and understood. It should include the type of data, format, and any post-processing that needs to be done.

Performance Measure: The performance measure should be clearly defined and understood. It should include the evaluation metric that will be used to measure the performance of the model.

In Machine Learning Techniques, a well-defined learning problem is a problem where the input, output, and desired behavior of the model are clearly specified. A well-defined learning problem is essential for the successful implementation of a machine learning model. Here are some notes on well-defined learning problems with reference to Machine Learning Techniques:

Definition: A well-defined learning problem is a problem where the input, output, and desired behavior of the model are clearly specified. This includes the type of input, the type of output, and the performance criteria for the model.

Examples:

A supervised learning problem where the input is an image and the output is a label indicating whether the image contains a dog or a cat.

A supervised learning problem where the input is a customer's historical data and the output is a prediction of whether the customer will churn.

An unsupervised learning problem where the input is a set of market transactions and the goal is to find patterns or clusters in the data.

Mnemonics: To remember the importance of well-defined learning problems, one can use the mnemonic "CLEAR":

C stands for "clearly defined inputs and outputs"

L stands for "learning goal is defined"

E stands for "evaluation metric is defined"

A stands for "algorithms are chosen based on the problem"

R stands for "real-world scenario"

Real-world Scenario: In real-world scenarios, a well-defined learning problem is crucial for the successful implementation of machine learning models. For example, in the healthcare industry, a well-defined learning problem would be to predict the likelihood of a patient developing a certain disease based on their medical history and test results. The input would be the patient's medical history and test results, the output would be a probability of the patient developing the disease, and the performance criteria would be the accuracy of the predictions. With a well-defined learning problem, appropriate algorithms can be chosen, and the model can be evaluated using the chosen metric.

In conclusion, a well-defined learning problem is essential for the successful implementation of machine learning models. It allows for the clear specification of inputs, outputs, and desired behavior, which in turn enables the selection of appropriate algorithms and the evaluation of model performance.

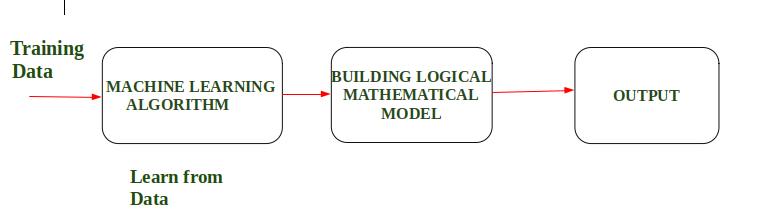

According to Arthur Samuel, machine learning enables a machine to learn automatically from data, improve its performance with experience, and make predictions without being explicitly programmed.

In simple words, when we feed training data to a machine learning algorithm, the algorithm produces a mathematical model, and with the help of this model the machine makes predictions and takes decisions without being explicitly programmed. Also, the more training data the machine works with, the more experience it gains and the more accurate its results become.

Example: In a driverless car, training data is fed to the algorithm covering how to drive on highways and on busy and narrow streets, with factors such as speed limits, parking, and stopping at signals. A logical and mathematical model is then created on the basis of that data, and the car operates according to this model. Also, the more data is fed in, the more efficient the output becomes.

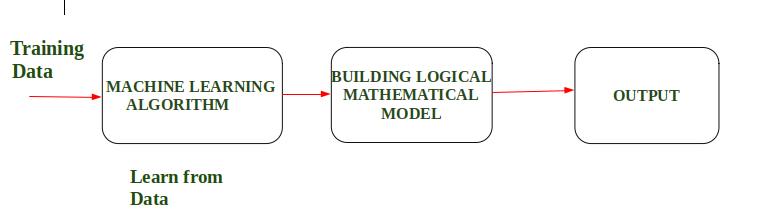

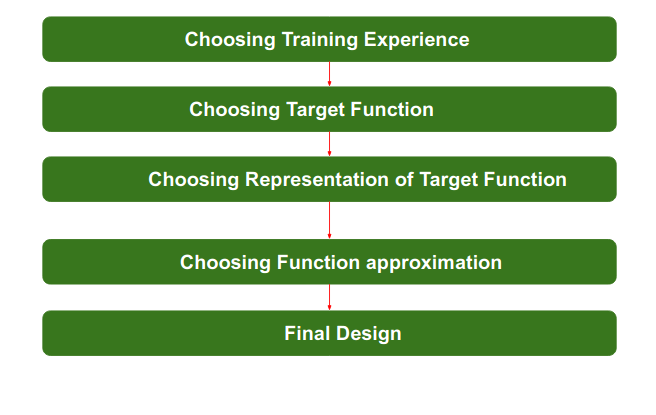

Designing a Learning System in Machine Learning:

According to Tom Mitchell, “A computer program is said to learn from experience E with respect to some class of tasks T and performance measure P, if its performance at tasks in T, as measured by P, improves with experience E.”

Example: In Spam E-Mail detection,

Task, T: To classify mails into Spam or Not Spam.

Performance measure, P: Total percent of mails being correctly classified as being “Spam” or “Not Spam”.

Experience, E: A set of mails labeled “Spam” or “Not Spam”.

Steps for Designing Learning System are:

Step 1) Choosing the Training Experience: The first and most important task is to choose the training data, or training experience, that will be fed to the machine learning algorithm. The data or experience we feed to the algorithm has a significant impact on the success or failure of the model, so it should be chosen wisely.

The following attributes of the training experience affect the success or failure of the model:

The first attribute is whether the training experience provides direct or indirect feedback regarding the choices made. For example, while playing chess, the training data can provide feedback such as: had a different move been chosen here, the chances of success would have increased.

The second attribute is the degree to which the learner controls the sequence of training examples. For example, when training data is first fed to the machine, its accuracy is very low, but as it gains experience by playing again and again against itself or an opponent, the algorithm receives feedback and adjusts its play accordingly.

The third attribute is how well the training experience represents the distribution of examples over which performance will be measured. For example, a machine learning algorithm gains experience by going through a number of different cases and examples. The more examples it passes through, the more experience it gains and the more its performance increases.

Step 2) Choosing the Target Function: The next important step is choosing the target function. Based on the knowledge fed to the algorithm, the learning system defines a NextMove function that describes which legal move should be taken. For example, when playing chess, after the opponent moves, the algorithm determines the possible legal moves and decides which of them to take in order to succeed.

Step 3) Choosing a Representation for the Target Function: Once the algorithm knows all the possible legal moves, the next step is to choose the optimized move using some representation of the target function, e.g. linear equations, a hierarchical graph representation, or a table. The NextMove function then selects, out of the available moves, the one that provides the highest success rate. For example, if the machine has 4 possible moves in a chess position, it chooses the one most likely to lead to success.

Step 4) Choosing a Function Approximation Algorithm: An optimized move cannot be chosen from the training data alone. The algorithm has to work through a set of examples, use them to approximate which moves should be chosen, and then receive feedback on those choices. For example, when chess training data is first fed to the algorithm, it is not known in advance whether a given choice will fail or succeed. From each failure or success, the algorithm estimates which move should be chosen next and what its success rate is.
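
Steps 2 to 4 can be sketched in code under some loud assumptions. The target function is represented as a linear equation over board features, and the weights are fitted with the least mean squares (LMS) rule, which is the approximation algorithm Mitchell uses in his checkers example; the text above does not name a specific algorithm. The two features, the training values, and the candidate moves are all hypothetical.

```python
import numpy as np

def evaluate(weights, features):
    """Linear representation: w0 + w1*x1 + ... + wn*xn."""
    return weights[0] + float(np.dot(weights[1:], features))

def lms_update(weights, features, target_value, learning_rate=0.01):
    """LMS rule: nudge each weight in proportion to the prediction error."""
    error = target_value - evaluate(weights, features)
    new_weights = weights.copy()
    new_weights[0] += learning_rate * error
    new_weights[1:] += learning_rate * error * features
    return new_weights

def next_move(weights, candidate_moves):
    """Pick the move whose resulting position has the highest estimated value."""
    return max(candidate_moves,
               key=lambda move: evaluate(weights, candidate_moves[move]))

# Hypothetical training examples: (board features, training value).
# The features are invented: (material difference, mobility difference).
examples = [
    (np.array([3.0, 2.0]), 1.0),
    (np.array([-2.0, -1.0]), -1.0),
    (np.array([0.0, 1.0]), 0.2),
    (np.array([1.0, -1.0]), 0.1),
]

def total_squared_error(weights):
    return sum((value - evaluate(weights, f)) ** 2 for f, value in examples)

weights = np.zeros(3)
initial_error = total_squared_error(weights)
for _ in range(2000):
    for features, value in examples:
        weights = lms_update(weights, features, value)
final_error = total_squared_error(weights)

# Hypothetical candidate moves, described by the features of the resulting board.
candidates = {"a": np.array([2.0, 1.0]), "b": np.array([-1.0, 0.0])}
chosen = next_move(weights, candidates)
```

In a real game-playing system the training values would themselves be estimates derived from game outcomes, which is where the indirect feedback discussed in Step 1 comes in.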

Step 5) Final Design: The final design emerges after the system has worked through many examples, failures and successes, and correct and incorrect decisions, and has determined what the next step should be. Example: Deep Blue, IBM's chess-playing computer, won a match against the world chess champion Garry Kasparov in 1997, becoming the first computer to defeat a reigning world champion in a match.

It’s all well and good to ask if androids dream of electric sheep, but science fact has evolved to a point where it’s beginning to coincide with science fiction. No, we don’t have autonomous androids struggling with existential crises — yet — but we are getting ever closer to what people tend to call “artificial intelligence.”

Machine Learning is a subset of artificial intelligence where computer algorithms are used to autonomously learn from data and information. In machine learning, computers don’t have to be explicitly programmed but can change and improve their algorithms by themselves.

Today, machine learning algorithms enable computers to communicate with humans, autonomously drive cars, write and publish sport match reports, and find terrorist suspects. I firmly believe machine learning will severely impact most industries and the jobs within them, which is why every manager should have at least some grasp of what machine learning is and how it is evolving.

In this post I offer a quick trip through time to examine the origins of machine learning as well as the most recent milestones.

1950 — Alan Turing creates the “Turing Test” to determine if a computer has real intelligence. To pass the test, a computer must be able to fool a human into believing it is also human.

1952 — Arthur Samuel wrote the first computer learning program. The program was the game of checkers, and the IBM computer improved at the game the more it played, studying which moves made up winning strategies and incorporating those moves into its program.

1957 – Frank Rosenblatt designed the first neural network for computers (the perceptron), which simulates the thought processes of the human brain.

1967 — The “nearest neighbor” algorithm was written, allowing computers to begin using very basic pattern recognition. This could be used to map a route for traveling salesmen, starting at a random city but ensuring they visit all cities during a short tour.

1979 — Students at Stanford University invent the “Stanford Cart” which can navigate obstacles in a room on its own.

1981 – Gerald DeJong introduces the concept of Explanation Based Learning (EBL), in which a computer analyses training data and creates a general rule it can follow by discarding unimportant data.

1985 – Terry Sejnowski invents NetTalk, which learns to pronounce words the same way a baby does.

1990s — Work on machine learning shifts from a knowledge-driven approach to a data-driven approach. Scientists begin creating programs for computers to analyze large amounts of data and draw conclusions — or “learn” — from the results.

1997 — IBM’s Deep Blue beats the world champion at chess.

2006 – Geoffrey Hinton popularizes the term “deep learning” to explain new algorithms that let computers “see” and distinguish objects and text in images and videos.

2010 — The Microsoft Kinect can track 20 human features at a rate of 30 times per second, allowing people to interact with the computer via movements and gestures.

2011 — IBM’s Watson beats its human competitors at Jeopardy.

2011 — Google Brain is developed, and its deep neural network can learn to discover and categorize objects much the way a cat does.

2012 – Google’s X Lab develops a machine learning algorithm that is able to autonomously browse YouTube videos to identify the videos that contain cats.

2014 – Facebook develops DeepFace, a software algorithm that is able to recognize or verify individuals on photos to the same level as humans can.

2015 – Amazon launches its own machine learning platform.

2015 – Microsoft creates the Distributed Machine Learning Toolkit, which enables the efficient distribution of machine learning problems across multiple computers.

2015 – Over 3,000 AI and Robotics researchers, endorsed by Stephen Hawking, Elon Musk and Steve Wozniak (among many others), sign an open letter warning of the danger of autonomous weapons which select and engage targets without human intervention.

2016 – Google’s artificial intelligence algorithm beats a professional player at the Chinese board game Go, which is considered the world’s most complex board game and is many times harder than chess. The AlphaGo algorithm developed by Google DeepMind managed to win five games out of five in the Go competition.

So are we drawing closer to artificial intelligence? Some scientists believe that’s actually the wrong question.

They believe a computer will never “think” in the way that a human brain does, and that comparing the computational analysis and algorithms of a computer to the machinations of the human mind is like comparing apples and oranges.

Regardless, computers’ abilities to see, understand, and interact with the world around them are growing at a remarkable rate. And as the quantities of data we produce continue to grow exponentially, so will our computers’ ability to process, analyze, and learn from that data.

With the constant advancements in artificial intelligence, the field has become too big to specialize in altogether. There are countless problems that we can solve with countless methods. The knowledge of an experienced AI researcher who specializes in one field may be of little use in another. Understanding the nature of different machine learning problems is very important. Even though the list of machine learning problems is very long and impossible to explain in a single post, we can group these problems into four different learning approaches:

Supervised Learning;

Unsupervised Learning;

Semi-supervised Learning; and

Reinforcement Learning.

Before we dive into each of these approaches, let’s start with what machine learning is:

What is Machine Learning?

The term “Machine Learning” was first coined in 1959 by Arthur Samuel, an IBM scientist and pioneer in computer gaming and artificial intelligence. Machine learning is considered a sub-discipline under the field of artificial intelligence. It aims to automatically improve the performance of the computer algorithms designed for particular tasks using experience. In a machine learning study, the experience is derived from the training data, which may be defined as the sample data collected on previously recorded observations or live feedback. Through this experience, machine learning algorithms can learn and build mathematical models to make predictions and decisions.

The learning process starts by feeding training data (e.g., examples, direct experience, basic instructions) into the model. By using these data, models can find valuable patterns in the data very quickly. These patterns are then used to make predictions and decisions on relevant events. The learning may continue even after deployment if the developer builds a suitable machine learning system which allows continuous training.

Four Machine Learning Approaches

The top machine learning approaches are categorized according to the nature of their feedback mechanism for learning. Most machine learning problems can be addressed by adopting one of these approaches. Yet we may still encounter complex machine learning solutions that do not fit into any of them.

This categorization is essential because it will help you quickly uncover the nature of a problem you may encounter in the future, analyze your resources, and develop a suitable solution.

Let’s start with the supervised learning approach.

Supervised Learning

Supervised learning is the machine learning task of learning a function that maps an input to an output based on example input-output pairs. It infers a function from labeled training data consisting of a set of training examples.

The supervised learning approach can be adopted when a dataset contains the records of the response variable values (or labels). Depending on the context, this data with labels is usually referred to as “labeled data” and “training data.”

Example 1: When we try to predict a person’s height using his weight, age, and gender, we need the training data that contains people’s weight, age, gender info along with their real heights. This data allows the machine learning algorithm to discover the relationship between height and the other variables. Then, using this knowledge, the model can predict the height of a given person.
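
Example 1 can be sketched with an ordinary least-squares fit. All numbers below are synthetic: the rule used to generate heights is invented purely so the example has something to learn, and gender is assumed to be encoded as 0 or 1.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 300

# Synthetic "people" (illustrative only, not real measurements).
weight = rng.uniform(50.0, 100.0, size=n)   # kg
age = rng.uniform(18.0, 70.0, size=n)       # years
gender = rng.integers(0, 2, size=n)         # assumed 0/1 encoding

# Invented generating rule plus noise; the model never sees this formula.
height = (120.0 + 0.6 * weight - 0.05 * age + 8.0 * gender
          + rng.normal(0.0, 3.0, size=n))   # cm

# Labeled training data: features (with an intercept column) and real heights.
features = np.column_stack([np.ones(n), weight, age, gender])
coeffs, *_ = np.linalg.lstsq(features, height, rcond=None)

def predict_height(weight_kg, age_years, gender_code):
    """Predict height in cm for a new person from the learned coefficients."""
    return float(coeffs @ np.array([1.0, weight_kg, age_years, gender_code]))

print(f"predicted height: {predict_height(70.0, 30.0, 1):.1f} cm")
```

The learned coefficients are the "relationship between height and the other variables" that the example refers to.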

Example 2: We can mark e-mails as ‘spam’ or ‘not-spam’ based on the differentiating features of the previously seen spam and not-spam e-mails, such as the lengths of the e-mails and use of particular keywords in the e-mails. Learning from training data continues until the machine learning model achieves a high level of accuracy on the training data.

There are two main supervised learning problems: (i) Classification Problems and (ii) Regression Problems.

Classification Problem

In classification problems, the models learn to classify an observation based on their variable values. During the learning process, the model is exposed to a lot of observations with their labels. For example, after seeing thousands of customers with their shopping habits and gender information, a model may successfully predict the gender of a new customer based on his/her shopping habits. Binary classification is the term used for grouping under two labels, such as male and female. Another binary classification example might be predicting whether the animal in a picture is a ‘cat’ or ‘not cat,’ as shown in Figure 2–4.

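On the other hand, multiclass classification is used when there are more than two labels and each observation belongs to exactly one of them. Identifying and predicting handwritten letters and numbers on an image would be an example of multiclass classification. (Multilabel classification is a different setting, in which a single observation can carry several labels at once.)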
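As a rough illustration of learning a classifier from labeled examples, the sketch below uses a 1-nearest-neighbour rule on the spam example. The feature pairs (e-mail length in words, number of suspicious keywords) and the `predict` helper are made up for illustration; a real spam filter would use far more data and features.

```python
import math

# Hypothetical labeled training data:
# (e-mail length in words, number of suspicious keywords) -> label
training = [
    ((120, 0), "not-spam"),
    ((300, 1), "not-spam"),
    ((40, 6), "spam"),
    ((25, 9), "spam"),
]

def predict(features):
    """Return the label of the closest training example (1-nearest neighbour)."""
    nearest = min(training, key=lambda pair: math.dist(pair[0], features))
    return nearest[1]

print(predict((30, 7)))    # short e-mail with many keywords
print(predict((200, 0)))   # long e-mail with no keywords
```

The model here is simply the stored training set; more elaborate classifiers replace the lookup with a learned function, but the idea of mapping features to a label seen in training is the same.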

Regression Problems

In regression problems, the goal is to calculate a value by taking advantage of the relationship between the other variables (i.e., independent variables, explanatory variables, or features) and the target variable (i.e., dependent variable, response variable, or label). The strength of the relationship between our target variable and the other variables is a critical determinant of the prediction value. Predicting how much a customer would spend based on their historical data is a regression problem.

Unsupervised Learning

Unsupervised learning is a type of machine learning that looks for previously undetected patterns in a data set with no pre-existing labels and with a minimum of human supervision.

Unsupervised learning is a learning approach used in ML algorithms to draw inferences from datasets, which do not contain labels.

There are two main unsupervised learning problems: (i) Clustering and (ii) Dimensionality Reduction.

Clustering

Unsupervised learning is mainly used in clustering analysis.

Clustering analysis is a grouping effort in which the members of a group (i.e., a cluster) are more similar to each other than to the members of the other clusters.

There are many different clustering methods available. They usually utilize a type of similarity measure based on selected metrics such as Euclidean or probabilistic distance. Bioinformatic sequence analysis, genetic clustering, pattern mining, and object recognition are some of the clustering problems that may be tackled with the unsupervised learning approach.

Dimensionality Reduction

Another use case of unsupervised learning is dimensionality reduction. Dimensionality is equivalent to the number of features used in a dataset. In some datasets, you may find hundreds of potential features stored in individual columns. In most of these datasets, several of these columns are highly correlated. Therefore, we should either select the best ones, i.e., feature selection, or extract new features combining the existing ones, i.e., feature extraction. This is where unsupervised learning comes into play. Dimensionality reduction methods help us create neater and cleaner models that are free of noise and unnecessary features.
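One simple way to act on highly correlated columns is a correlation filter, sketched below. The `pearson` and `drop_correlated` helpers, the 0.95 threshold, and the toy columns are assumptions for illustration only; the sketch also assumes no column is constant, since that would make the correlation undefined.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient between two equally long lists."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

def drop_correlated(columns, threshold=0.95):
    """Keep a column only if it is not highly correlated with one already kept."""
    kept = {}
    for name, values in columns.items():
        if all(abs(pearson(values, other)) < threshold for other in kept.values()):
            kept[name] = values
    return kept

# Hypothetical dataset: the two height columns carry the same information.
columns = {
    "height_cm": [150, 160, 170, 180, 190],
    "height_in": [59.1, 63.0, 66.9, 70.9, 74.8],
    "age": [30, 22, 41, 35, 28],
}
print(list(drop_correlated(columns)))
```

This is a form of feature selection; feature extraction methods such as principal component analysis instead build new columns from combinations of the existing ones.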

Semi-Supervised Learning

Semi-supervised learning is a machine learning approach that combines the characteristics of supervised learning and unsupervised learning. A semi-supervised learning approach is particularly useful when we have a small amount of labeled data with a large amount of unlabeled data available for training. Supervised learning characteristics help take advantage of the small amount of labeled data. In contrast, unsupervised learning characteristics are useful to take advantage of a large amount of unlabeled data.

Semi-supervised learning is an approach to machine learning that combines a small amount of labeled data with a large amount of unlabeled data during training.

You might wonder whether there are useful real-life applications for semi-supervised learning. Although supervised learning is a powerful learning approach, labeling data for use in supervised learning is a costly and time-consuming process. On the other hand, a sizeable volume of data can be beneficial even when it is not labeled. So, in real life, semi-supervised learning may turn out to be the most suitable and the most fruitful ML approach if done correctly.

In semi-supervised learning, we usually start by clustering the unlabeled data. Then, we use the labeled data to label the clustered unlabeled data. Finally, a significant amount of now-labeled data is used to train machine learning models. Semi-supervised learning models can be very powerful since they can take advantage of a high volume of data.
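The second step of that procedure, using the few known labels to label whole clusters, might look like the sketch below. It assumes the clustering has already been done and that unlabeled points are marked with `None`; the `label_clusters` helper and the majority-vote rule are illustrative choices, not the only way to do this.

```python
from collections import Counter

def label_clusters(cluster_ids, known_labels):
    """Give each cluster the most common label among its labeled members,
    then propagate that label to every point in the cluster."""
    votes = {}
    for cluster, label in zip(cluster_ids, known_labels):
        if label is not None:
            votes.setdefault(cluster, Counter())[label] += 1
    cluster_label = {c: counts.most_common(1)[0][0] for c, counts in votes.items()}
    # Points in a cluster with no labeled member stay unlabeled (None).
    return [cluster_label.get(c) for c in cluster_ids]

# Hypothetical input: seven points in two clusters, only three of them labeled.
cluster_ids = [0, 0, 0, 1, 1, 1, 1]
known_labels = ["cat", None, None, None, "dog", "dog", None]
print(label_clusters(cluster_ids, known_labels))
```

The resulting fully labeled list can then be fed to any supervised learner.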

Semi-supervised learning models are usually a combination of transformed and adjusted versions of the existing machine learning algorithms used in supervised and unsupervised learning. This approach is successfully used in areas like speech analysis, content classification, and protein sequence classification. The similarity of these fields is that they offer abundant unlabeled data and only a small amount of labeled data.

Reinforcement Learning

Reinforcement learning is one of the primary approaches to machine learning concerned with finding optimal agent actions that maximize the reward within a particular environment. The agent learns to perfect its actions to gain the highest possible cumulative reward.

Reinforcement learning (RL) is an area of machine learning concerned with how software agents ought to take actions in an environment in order to maximize the notion of cumulative reward.

There are four main elements in reinforcement learning:

Agent: The trainable program which exercises the tasks assigned to it.

Environment: The real or virtual universe where the agent completes its tasks.

Action: A move of the agent which results in a change of status in the environment.

Reward: A negative or positive remuneration based on the action.

Reinforcement learning may be used in both the real world and the virtual world:

Example 1: You may create an evolving ad placement system deciding how many ads to place on a website based on the ad revenue generated in different setups. The ad placement system would be an excellent example of real-world applications.

Example 2: On the other hand, you can train an agent in a video game with reinforcement learning to compete against other players. Such agents are usually referred to as bots.

Example 3: Finally, both virtual and real robots can be trained to move using the reinforcement learning approach.

Some of the popular reinforcement learning models may be listed as follows:

Q-Learning

State-Action-Reward-State-Action (SARSA)

Deep Q Network (DQN)

Deep Deterministic Policy Gradient (DDPG)

One of the disadvantages of the popular deep learning frameworks is that they lack comprehensive module support for reinforcement learning, and TensorFlow and PyTorch are no exception. In practice, deep reinforcement learning is usually done with extension libraries built on top of existing deep learning libraries, such as Keras-RL, TF-Agents, and Tensorforce, or with dedicated reinforcement learning libraries such as OpenAI Baselines and Stable Baselines.
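To make the agent, environment, action, and reward elements concrete, here is a minimal tabular Q-learning sketch that needs no extension library. The five-cell corridor environment, the hyperparameter values, and the helper names are all assumptions chosen for illustration.

```python
import random

N_STATES, GOAL = 5, 4          # corridor cells 0..4; reaching cell 4 ends an episode
ACTIONS = (0, 1)               # 0 = move left, 1 = move right
ALPHA, GAMMA, EPSILON = 0.5, 0.9, 0.2   # learning rate, discount, exploration rate

def step(state, action):
    """Environment: deterministic move; reward 1 only when the goal is reached."""
    next_state = max(0, state - 1) if action == 0 else state + 1
    return next_state, (1.0 if next_state == GOAL else 0.0)

def train(episodes=500, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        while state != GOAL:
            if rng.random() < EPSILON:
                action = rng.choice(ACTIONS)          # explore
            else:                                     # exploit, breaking ties randomly
                action = max(ACTIONS, key=lambda a: (q[state][a], rng.random()))
            next_state, reward = step(state, action)
            # Q-learning update: move the estimate toward
            # reward + discounted value of the best next action
            target = reward + GAMMA * max(q[next_state])
            q[state][action] += ALPHA * (target - q[state][action])
            state = next_state
    return q

q_table = train()
policy = [max(ACTIONS, key=lambda a: q_table[s][a]) for s in range(GOAL)]
print(policy)   # greedy action per non-goal cell
```

After training, the greedy policy should prefer moving right in every cell, since that is the shortest way to the reward. Deep variants such as DQN replace the table with a neural network.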

Now that we have covered all four approaches, here is a summary visual offering a basic comparison of the different ML approaches:

Final Notes

The field of AI is expanding very quickly and becoming a major research field. As the field expands, sub-fields and sub-subfields of AI have started to appear. Although we cannot master the entire field, we can at least be informed about the major learning approaches.

The purpose of this post was to make you acquainted with these four machine learning approaches. In the upcoming post, we will cover other AI essentials.

Artificial neural networks (ANNs), usually simply called neural networks (NNs) or neural nets, are computing systems inspired by the biological neural networks that constitute animal brains.

An ANN is based on a collection of connected units or nodes called artificial neurons, which loosely model the neurons in a biological brain. Each connection, like the synapses in a biological brain, can transmit a signal to other neurons. An artificial neuron receives signals then processes them and can signal neurons connected to it. The "signal" at a connection is a real number, and the output of each neuron is computed by some non-linear function of the sum of its inputs. The connections are called edges. Neurons and edges typically have a weight that adjusts as learning proceeds. The weight increases or decreases the strength of the signal at a connection. Neurons may have a threshold such that a signal is sent only if the aggregate signal crosses that threshold.

Typically, neurons are aggregated into layers. Different layers may perform different transformations on their inputs. Signals travel from the first layer (the input layer), to the last layer (the output layer), possibly after traversing the layers multiple times.

The original goal of neural networks was to model the brain, but they have also been used for statistical modeling and data mining. Neural networks are used in applications such as speech recognition, image recognition, medical diagnosis, and natural language processing. Neural networks are also used in reinforcement learning, a form of machine learning that allows software agents to automatically determine the ideal behavior within a specific context in order to maximize its performance.

An artificial neuron is a mathematical model of a biological neuron. It is a mathematical function that takes a set of inputs, performs a calculation on them, and produces a single output. The output of the artificial neuron is a function of the inputs and the weights associated with the inputs. The artificial neuron is the basic unit of a neural network.
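A single artificial neuron can be sketched in a few lines. The sigmoid activation and the `bias` term are assumptions made here for illustration; other non-linear functions are equally valid choices.

```python
import math

def neuron(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through a sigmoid activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1.0 / (1.0 + math.exp(-z))

# The weighted sum is 0.5 * 1.0 + (-0.25) * 2.0 + 0.0 = 0.0, and sigmoid(0) = 0.5
print(neuron([1.0, 2.0], [0.5, -0.25], 0.0))
```

Learning consists of adjusting `weights` and `bias` so that the outputs move closer to the desired values.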

Clustering is the task of grouping a set of objects in such a way that objects in the same group (called a cluster) are more similar (in some sense or another) to each other than to those in other groups (clusters). Clustering is a main task of exploratory data mining, and a common technique for statistical data analysis, used in many fields, including machine learning, pattern recognition, image analysis, information retrieval, bioinformatics, data compression, and computer graphics.

Clustering is a method of unsupervised learning, which is used when you have unlabeled data (i.e., data without defined categories or groups). The goal of clustering is to identify the inherent groupings in the data, such as grouping customers by purchasing behavior. Clustering is also used for exploratory data analysis to find hidden patterns or grouping in data.

In the field of machine learning, clustering is one of the most popular unsupervised learning methods. Beyond exploratory data analysis, it is also used for customer segmentation, recommender systems, image segmentation, semi-supervised learning, and dimensionality reduction.

The main goal of clustering is to group the data into distinct groups, such that the data points in the same group are similar to each other and dissimilar to the data points in other groups. There are several clustering algorithms, such as K-means, hierarchical clustering, DBSCAN, and Gaussian mixture models. The K-means algorithm is one of the most popular clustering algorithms and is used for a wide range of applications.

Types of clustering

Here we discuss the popular clustering algorithms that are widely used in machine learning:

K-Means algorithm: The k-means algorithm is one of the most popular clustering algorithms. It partitions the dataset by dividing the samples into clusters of equal variance. The number of clusters must be specified in advance. It is fast, requiring relatively few computations, with a per-iteration cost that grows linearly with the number of samples, O(n).

Mean-shift algorithm: The mean-shift algorithm tries to find the dense areas in the smooth density of data points. It is an example of a centroid-based model that works by updating the candidates for centroids to be the center of the points within a given region.

DBSCAN Algorithm: It stands for Density-Based Spatial Clustering of Applications with Noise. It is an example of a density-based model similar to mean-shift, but with some remarkable advantages. In this algorithm, the areas of high density are separated by areas of low density. Because of this, clusters of arbitrary shape can be found.

Expectation-Maximization Clustering using GMM: This algorithm can be used as an alternative to the k-means algorithm or in cases where k-means may fail. In a Gaussian mixture model (GMM), it is assumed that the data points are Gaussian distributed.

Agglomerative Hierarchical algorithm: The agglomerative hierarchical algorithm performs bottom-up hierarchical clustering. Each data point is treated as a single cluster at the outset, and clusters are then successively merged. The cluster hierarchy can be represented as a tree structure.

Affinity Propagation: It differs from other clustering algorithms in that it does not require the number of clusters to be specified. Messages are exchanged between pairs of data points until convergence. It has O(N²T) time complexity, where N is the number of samples and T the number of iterations, which is the main drawback of this algorithm.
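The k-means idea from the list above can be sketched with the standard library alone. This is a simplified version of the usual iteration (often called Lloyd's algorithm); the fixed starting centroids, the iteration count, and the toy points are assumptions, whereas real implementations usually pick starting centroids randomly and stop once assignments no longer change.

```python
import math

def k_means(points, centroids, iterations=10):
    """Alternate between assigning points to the nearest centroid
    and moving each centroid to the mean of its assigned points."""
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Move each centroid to the mean of its cluster (keep it if the cluster is empty)
        centroids = [
            tuple(sum(coord) / len(cluster) for coord in zip(*cluster)) if cluster else c
            for cluster, c in zip(clusters, centroids)
        ]
    return centroids, clusters

points = [(1.0, 1.0), (1.0, 2.0), (2.0, 1.0), (8.0, 8.0), (9.0, 8.0), (8.0, 9.0)]
centroids, clusters = k_means(points, centroids=[(0.0, 0.0), (10.0, 10.0)])
print(centroids)
print(clusters)
```

Each pass over the points costs work proportional to the number of points times the number of clusters, which is where the linear growth in n comes from.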

Applications of Clustering

Below are some commonly known applications of the clustering technique in Machine Learning:

In Identification of Cancer Cells: Clustering algorithms are widely used for the identification of cancerous cells. They divide cancerous and non-cancerous data into different groups.

In Search Engines: Search engines also work on the clustering technique. Search results appear based on the objects closest to the search query. This is done by grouping similar data objects in one group that is far from other, dissimilar objects. The accuracy of a query result depends on the quality of the clustering algorithm used.

Customer Segmentation: It is used in market research to segment customers based on their choices and preferences.

In Biology: It is used in biology to classify different species of plants and animals using image recognition techniques.

In Land Use: The clustering technique is used to identify areas of similar land use in a GIS database. This can be very useful for determining the purpose for which a particular piece of land is most suitable.

Reinforcement learning (RL) is an area of machine learning concerned with how intelligent agents ought to take actions in an environment in order to maximize the notion of cumulative reward. Reinforcement learning is one of three basic machine learning paradigms, alongside supervised learning and unsupervised learning.

Reinforcement learning differs from supervised learning in not needing labelled input/output pairs to be presented, and in not needing sub-optimal actions to be explicitly corrected. Instead the focus is on finding a balance between exploration (of uncharted territory) and exploitation (of current knowledge).

The environment is typically stated in the form of a Markov decision process (MDP), because many reinforcement learning algorithms for this context use dynamic programming techniques. The main difference between the classical dynamic programming methods and reinforcement learning algorithms is that the latter do not assume knowledge of an exact mathematical model of the MDP and they target large MDPs where exact methods become infeasible.

Decision tree learning is one of the predictive modelling approaches used in statistics, data mining and machine learning. It uses a decision tree (as a predictive model) to go from observations about an item (represented in the branches) to conclusions about the item's target value (represented in the leaves).

The decision tree learning algorithm is a non-parametric supervised learning method that can be used for both classification and regression problems.
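The core step in growing a classification tree is choosing a split for a node. The sketch below picks a threshold on a single numeric feature by minimising Gini impurity, which is one common criterion among several; the `gini` and `best_split` helpers and the toy data are assumptions for illustration.

```python
def gini(labels):
    """Gini impurity: 0 when all labels agree, higher when they are mixed."""
    n = len(labels)
    return 1.0 - sum((labels.count(c) / n) ** 2 for c in set(labels))

def best_split(values, labels):
    """Try each threshold on one numeric feature and return
    (threshold, weighted impurity) for the lowest-impurity split."""
    best = (None, float("inf"))
    for threshold in sorted(set(values))[:-1]:
        left = [l for v, l in zip(values, labels) if v <= threshold]
        right = [l for v, l in zip(values, labels) if v > threshold]
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
        if score < best[1]:
            best = (threshold, score)
    return best

# Hypothetical data: customer age and whether they bought a product
ages = [22, 25, 31, 47, 52, 60]
bought = ["no", "no", "no", "yes", "yes", "yes"]
print(best_split(ages, bought))   # splitting at age <= 31 separates the classes perfectly
```

A full tree applies the same search recursively to the left and right subsets until the leaves are pure or a depth limit is reached.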

Support vector machines (SVMs) are a set of supervised learning methods used for classification, regression and outlier detection.

Genetic Algorithm (GA) is a search heuristic and optimization technique inspired by the process of natural selection. Here are some notes on Genetic Algorithm with reference to Machine Learning Techniques:

Definition: A Genetic Algorithm is a search heuristic that is inspired by the process of natural selection. It is used to search for the best solution to a problem by mimicking that process. A Genetic Algorithm starts with a population of solutions called chromosomes, and through the process of selection, crossover, and mutation, it evolves the population toward better solutions.

Components: A Genetic Algorithm comprises the following components:

Population: A set of solutions called chromosomes.

Fitness Function: A function that measures the quality of a solution.

Selection: A process of selecting the best chromosomes from the population.

Crossover: A process of combining two chromosomes to create a new one.

Mutation: A process of introducing small random changes to a chromosome.

Termination: A stopping criterion to decide when to stop the algorithm.

GA cycle of reproduction: A Genetic Algorithm follows a cyclic process of reproduction where the following steps are repeated to generate new generations:

Selection: The chromosomes are selected based on their fitness values.

Crossover: The selected chromosomes are combined to create new offspring.

Mutation: The new offspring are then mutated to introduce randomness and diversity in the population.

Applications: Genetic Algorithms are widely used in a variety of applications, including optimization of complex functions, scheduling, game playing and trading, image processing, and pattern recognition.

Examples:

A problem of finding a short route between cities can be approached using a genetic algorithm.

A problem of finding the global minimum of a function can be approached using a genetic algorithm.

In conclusion, Genetic Algorithm is a powerful optimization technique that is inspired by the process of natural selection. Being a heuristic, it searches for good solutions rather than guaranteeing the best one. The flexibility of GA makes it a popular choice among researchers and practitioners for solving various optimization problems.
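The selection, crossover, and mutation cycle described above can be sketched on a toy problem. The example below uses "OneMax" (maximise the number of 1-bits in a bit string) purely as an illustration; the population size, mutation rate, truncation selection, and elitism step are assumptions, and many other variants exist.

```python
import random

def fitness(chromosome):
    """OneMax toy problem: fitness is the number of 1-bits."""
    return sum(chromosome)

def evolve(length=20, pop_size=30, generations=40, mutation_rate=0.02, seed=0):
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(length)] for _ in range(pop_size)]
    for _ in range(generations):               # termination: fixed number of generations
        population.sort(key=fitness, reverse=True)
        parents = population[: pop_size // 2]  # selection: keep the fitter half
        children = [population[0][:]]          # elitism: carry the best over unchanged
        while len(children) < pop_size:
            mother, father = rng.sample(parents, 2)
            cut = rng.randrange(1, length)     # crossover: single cut point
            child = mother[:cut] + father[cut:]
            # mutation: flip each bit with a small probability
            child = [bit ^ 1 if rng.random() < mutation_rate else bit for bit in child]
            children.append(child)
        population = children
    return max(population, key=fitness)

best = evolve()
print(best, fitness(best))
```

Because the best chromosome is copied unchanged into each new generation, the best fitness in the population can never decrease from one generation to the next.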

Overfitting: Overfitting occurs when a model is trained too well on the training data and performs poorly on new, unseen data. This happens when the model is too complex and is able to memorize the training data instead of learning the underlying patterns. Overfitting can be addressed by using techniques such as regularization, early stopping, and ensembling.

Underfitting: Underfitting occurs when a model is not able to capture the underlying patterns in the data and performs poorly on both the training and the test data. This happens when the model is too simple or when the model is not trained for enough iterations. Underfitting can be addressed by using more complex models or increasing the number of iterations.

Lack of Data: In some cases, the availability of labeled data can be a limitation. A model may not be able to generalize well to new data if it is trained on a small dataset. This can be addressed by collecting more data, using data augmentation techniques, or using transfer learning.

Data Quality: In some cases, the quality of the data can be a limitation. A model may not be able to generalize well to new data if the data is noisy, inconsistent, or biased. This can be addressed by data cleaning, data preprocessing and data validation.

Bias: Bias refers to the difference between the model's predictions and the true values for certain groups of data. Bias can occur when the training data is not representative of the population or when the model is not designed to handle certain types of data. This can be addressed by using techniques such as oversampling, undersampling, and data augmentation.

Privacy and Security: Machine Learning models may process sensitive data, which can lead to privacy and security issues. For example, a model that is trained on medical data may expose information about individual patients or be used to discriminate against certain groups of people. This can be addressed by using techniques such as differential privacy, homomorphic encryption and federated learning.

In conclusion, issues in Machine Learning can impact the performance of the model and the overall success of the project. It's important to be aware of these issues and to have strategies in place to address them.

A well-defined learning problem is essential for the successful implementation of a machine learning model. Here are some notes on well-defined learning problems with reference to Machine Learning Techniques:

Definition: A well-defined learning problem is a problem where the input, output, and desired behavior of the model are clearly specified. This includes the type of input, the type of output, and the performance criteria for the model.

Examples:

A supervised learning problem where the input is an image and the output is a label indicating whether the image contains a dog or a cat.

A supervised learning problem where the input is a customer's historical data and the output is a prediction of whether the customer will churn.

An unsupervised learning problem where the input is a set of market transactions and the goal is to find patterns or clusters in the data.

Mnemonics: To remember the importance of well-defined learning problems, one can use the mnemonic "CLEAR":

C stands for "clearly defined inputs and outputs"

L stands for "learning goal is defined"

E stands for "evaluation metric is defined"

A stands for "algorithms are chosen based on the problem"

R stands for "real-world scenario"

Real-world Scenario: In real-world scenarios, a well-defined learning problem is crucial for the successful implementation of machine learning models. For example, in the healthcare industry, a well-defined learning problem would be to predict the likelihood of a patient developing a certain disease based on their medical history and test results. The input would be the patient's medical history and test results, the output would be a probability of the patient developing the disease, and the performance criterion would be the accuracy of the predictions. With a well-defined learning problem, appropriate algorithms can be chosen, and the model can be evaluated using the chosen metric.

In conclusion, a well-defined learning problem is essential for the successful implementation of machine learning models. It allows for the clear specification of inputs, outputs, and desired behavior, which in turn enables the selection of appropriate algorithms and the evaluation of model performance.

Linear regression is one of the easiest and most popular Machine Learning algorithms. It is a statistical method that is used for predictive analysis. Linear regression makes predictions for continuous/real or numeric variables such as sales, salary, age, product price, etc.

The linear regression algorithm models a linear relationship between a dependent variable (y) and one or more independent variables (x), hence the name linear regression. Because the relationship is linear, the model describes how the value of the dependent variable changes with the value of the independent variable.

The linear regression model provides a sloped straight line representing the relationship between the variables.

[Figure: Linear Regression in Machine Learning]

Mathematically, we can represent a linear regression as:

$$y = a_0 + a_1 x + \varepsilon$$

Here,

y = Dependent variable (target variable)

x = Independent variable (predictor variable)

a_0 = Intercept of the line (gives an additional degree of freedom)

a_1 = Linear regression coefficient (scale factor applied to each input value)

ε = Random error

The observed values of x and y form the training dataset used to fit the linear regression model.

Types of Linear Regression

Linear regression can be further divided into two types of algorithm:

Simple Linear Regression: If a single independent variable is used to predict the value of a numerical dependent variable, then such a linear regression algorithm is called Simple Linear Regression.

Multiple Linear Regression: If more than one independent variable is used to predict the value of a numerical dependent variable, then such a linear regression algorithm is called Multiple Linear Regression.

Linear Regression Line

A straight line showing the relationship between the dependent and independent variables is called a regression line. A regression line can show two types of relationship:

Positive Linear Relationship: If the dependent variable increases on the Y-axis as the independent variable increases on the X-axis, the relationship is termed a positive linear relationship.

Negative Linear Relationship: If the dependent variable decreases on the Y-axis as the independent variable increases on the X-axis, the relationship is called a negative linear relationship.

Finding the best fit line: When working with linear regression, our main goal is to find the best fit line, which means that the error between predicted values and actual values should be minimized. The best fit line will have the least error.

Different values for the weights or coefficients of the line (a0, a1) give different regression lines, so we need to calculate the best values for a0 and a1 to find the best fit line. To do this, we use a cost function.

Cost function: The cost function is used to estimate the coefficient values for the best fit line. Minimizing it yields the regression coefficients or weights, and its value measures how well a linear regression model is performing. We can use the cost function to assess the accuracy of the mapping function, which maps the input variable to the output variable. This mapping function is also known as the hypothesis function. For linear regression, we use the Mean Squared Error (MSE) cost function, which is the average of the squared errors between the predicted values and actual values.

For the above linear equation, MSE can be calculated as:

$$\text{MSE} = \frac{1}{N} \sum_{i=1}^{N} \left( y_i - (a_1 x_i + a_0) \right)^2$$

Where,

N = Total number of observations

y_i = Actual value

(a_1 x_i + a_0) = Predicted value

Residuals: The distance between the actual value and the predicted value is called the residual. If the observed points are far from the regression line, then the residuals will be large, and so the cost function will be high. If the scatter points are close to the regression line, then the residuals will be small, and hence the cost function will be low.
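The relationship between residuals and the MSE cost can be checked numerically. In the sketch below the data points are made up and generated from the line y = 1 + 2x, so that line has zero error while any other line has a positive cost.

```python
def mse(xs, ys, a0, a1):
    """Mean squared error of the line y = a0 + a1 * x on the given points."""
    residuals = [y - (a1 * x + a0) for x, y in zip(xs, ys)]
    return sum(r ** 2 for r in residuals) / len(residuals)

xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]             # generated from y = 1 + 2x

print(mse(xs, ys, a0=1.0, a1=2.0))    # perfect line: every residual is 0
print(mse(xs, ys, a0=0.0, a1=2.0))    # every residual is 1, so the MSE is 1
```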

Gradient Descent: Gradient descent is used to minimize the MSE by calculating the gradient of the cost function. A regression model uses gradient descent to update the coefficients of the line by reducing the cost function. It starts from randomly selected coefficient values and then iteratively updates them to reach the minimum of the cost function.

Model Performance: The goodness of fit determines how well the regression line fits the set of observations. The process of finding the best model out of various models is called optimization. It can be assessed with the method below:

R-squared is a statistical measure of goodness of fit. It measures the strength of the relationship between the dependent and independent variables on a scale of 0-100%. A high R-squared value indicates a small difference between the predicted values and actual values and hence represents a good model. It is also called the coefficient of determination, or the coefficient of multiple determination for multiple regression. It can be calculated from the formula below:

$$R^2 = \frac{\text{explained variation}}{\text{total variation}} = 1 - \frac{\sum_i (y_i - \hat{y}_i)^2}{\sum_i (y_i - \bar{y})^2}$$

where \hat{y}_i is the predicted value for observation i and \bar{y} is the mean of the actual values.

Assumptions of Linear Regression

Below are some important assumptions of Linear Regression. These are formal checks to perform while building a Linear Regression model, which help to get the best possible result from the given dataset.

Linear relationship between the features and target: Linear regression assumes a linear relationship between the dependent and independent variables.

Small or no multicollinearity between the features: Multicollinearity means high correlation between the independent variables. Due to multicollinearity, it may be difficult to find the true relationship between the predictors and the target variable. In other words, it is difficult to determine which predictor variable is affecting the target variable and which is not. So, the model assumes either little or no multicollinearity between the features or independent variables.

Homoscedasticity Assumption: Homoscedasticity is a situation in which the variance of the error term is the same for all values of the independent variables. With homoscedasticity, there should be no clear pattern in the distribution of the data in the scatter plot.

Normal distribution of error terms: Linear regression assumes that the error term follows a normal distribution. If error terms are not normally distributed, then confidence intervals will become either too wide or too narrow, which may cause difficulties in finding coefficients. This can be checked using a q-q plot. If the plot shows a straight line without any deviation, the error is normally distributed.

No autocorrelations: The linear regression model assumes no autocorrelation in error terms. Any correlation in the error term will drastically reduce the accuracy of the model. Autocorrelation usually occurs if there is a dependency between residual errors.
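To tie together the gradient descent procedure and the R-squared measure from this section, here is a minimal sketch for simple linear regression. The learning rate, step count, and data are assumptions chosen so that the example converges, and the coefficients start at zero rather than at random values to keep the run reproducible.

```python
def fit_line(xs, ys, learning_rate=0.05, steps=5000):
    """Minimise the MSE of y = a0 + a1 * x with batch gradient descent."""
    a0, a1 = 0.0, 0.0                       # starting point (zeros here rather than random)
    n = len(xs)
    for _ in range(steps):
        errors = [(a1 * x + a0) - y for x, y in zip(xs, ys)]
        grad_a0 = 2 * sum(errors) / n                             # d(MSE)/d(a0)
        grad_a1 = 2 * sum(e * x for e, x in zip(errors, xs)) / n  # d(MSE)/d(a1)
        a0 -= learning_rate * grad_a0
        a1 -= learning_rate * grad_a1
    return a0, a1

def r_squared(xs, ys, a0, a1):
    """1 minus the ratio of residual variation to total variation."""
    mean_y = sum(ys) / len(ys)
    ss_res = sum((y - (a1 * x + a0)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - mean_y) ** 2 for y in ys)
    return 1 - ss_res / ss_tot

xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2.9, 5.1, 7.0, 9.1, 10.9]             # roughly y = 1 + 2x with small noise
a0, a1 = fit_line(xs, ys)
print(round(a0, 2), round(a1, 2), round(r_squared(xs, ys, a0, a1), 3))
```

With a learning rate that is too large the updates would overshoot and diverge, so in practice this value is tuned or the features are scaled first.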

Logistic Regression is a supervised learning algorithm used for classification problems. Here are some notes on Logistic Regression:

Definition: Logistic Regression is a statistical method that is used to model the relationship between a set of independent variables and a binary dependent variable. The goal is to find the best set of parameters that maximizes the likelihood of the observed data.

Model: Logistic Regression models the probability of the binary outcome as a function of the input features using the logistic function (sigmoid function). The logistic function produces an output between 0 and 1, which can be interpreted as a probability of the binary outcome. The parameters of the model are learned by maximizing the likelihood of the observed data.

Training: Training a logistic regression model is iterative. It starts with an initial set of parameters and then uses an optimization algorithm such as gradient descent to update the parameters repeatedly, maximizing the likelihood of the observed data (equivalently, minimizing the negative log-likelihood).
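The training loop described above can be sketched in plain Python as follows. This is an illustrative implementation under simple assumptions (batch gradient descent, zero initialization, a fixed learning rate, no regularization), not how any particular library does it. The function names, hyperparameters, and the tiny dataset are hypothetical.

```python
import math


def sigmoid(z):
    """Logistic function, written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def predict_proba(x, weights, bias):
    """Probability of the positive class for one feature vector."""
    return sigmoid(sum(w * v for w, v in zip(weights, x)) + bias)


def train_logistic_regression(X, y, learning_rate=0.1, epochs=1000):
    """Batch gradient descent on the average negative log-likelihood."""
    n_samples, n_features = len(X), len(X[0])
    weights = [0.0] * n_features  # initial parameters
    bias = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for xi, yi in zip(X, y):
            error = predict_proba(xi, weights, bias) - yi
            for j in range(n_features):
                grad_w[j] += error * xi[j]
            grad_b += error
        # Step against the gradient of the loss (i.e. towards higher likelihood).
        weights = [w - learning_rate * g / n_samples for w, g in zip(weights, grad_w)]
        bias -= learning_rate * grad_b / n_samples
    return weights, bias


# Hypothetical one-feature dataset: small values are class 0, large values class 1.
X = [[0.0], [1.0], [2.0], [3.0]]
y = [0, 0, 1, 1]
weights, bias = train_logistic_regression(X, y, learning_rate=0.5, epochs=2000)
print(predict_proba([0.0], weights, bias), predict_proba([3.0], weights, bias))
```

After training, the predicted probability for the input 0.0 falls below 0.5 and the one for 3.0 rises above it, which is the behaviour the likelihood objective rewards.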

Evaluation Metrics: The performance of logistic regression models can be evaluated using metrics such as accuracy, precision, recall, and F1-score.
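As a small illustration of these metrics, the sketch below computes them from true and predicted binary labels. The function name and the example labels are hypothetical. Returning 0.0 when a denominator is zero is one possible convention, not the only one.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall and F1 for a binary classification task."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)

    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (
        2 * precision * recall / (precision + recall)
        if (precision + recall)
        else 0.0
    )
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical labels: 3 true positives, 1 false positive, 1 false negative, 3 true negatives.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

For this example all four metrics equal 0.75, because the errors happen to be balanced between false positives and false negatives.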

Applications: Logistic Regression is widely used in a variety of applications, including image classification, natural language processing, and bioinformatics.

Examples:

A logistic regression model that is used to predict whether an email is spam or not spam, based on the presence of certain keywords in the email.

A logistic regression model that is used to predict whether a patient has a certain disease based on their symptoms and test results.

In conclusion, Logistic Regression is a widely used algorithm for classification problems. It models the probability of a binary outcome as a function of the input features using the logistic function, and the parameters of the model are learned by maximizing the likelihood of the observed data. It's simple, easy to implement and can provide good results for a large number of problems.

Bayesian Learning is a statistical learning method based on Bayesian statistics and probability theory. It is used to update the beliefs about the state of the world based on new evidence. Here are some notes on Bayesian Learning:

Definition: Bayesian Learning is a statistical learning method based on Bayesian statistics and probability theory. The goal is to update the beliefs about the state of the world based on new evidence.

Bayes Theorem: Bayesian Learning is based on Bayes' theorem, which states that the probability of a hypothesis given some data (P(H|D)) is proportional to the probability of the data given the hypothesis (P(D|H)) multiplied by the prior probability of the hypothesis (P(H)). Bayes' theorem is often written as P(H|D) = P(D|H) * P(H) / P(D)

Prior and Posterior: In Bayesian Learning, a prior probability distribution is specified for the model parameters, which encodes our prior knowledge or beliefs about the parameters. When new data is observed, the prior is updated to form a posterior probability distribution, which encodes our updated beliefs about the parameters given the data.

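Conjugate Prior: To make the calculation of the posterior distribution simple and efficient, it is often useful to choose a conjugate prior, that is, a prior for which the posterior belongs to the same family of distributions as the prior under the given likelihood function. The update then reduces to adjusting the parameters of that family in closed form.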
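A standard example of a conjugate pair is a Beta prior with a Bernoulli (coin-flip) likelihood: the posterior is again a Beta distribution, and the update only adds the observed counts to the prior parameters. The sketch below illustrates this. The function names, the Beta(2, 2) prior, and the observed counts are hypothetical.

```python
def update_beta_prior(alpha, beta, successes, failures):
    """Posterior Beta parameters after observing Bernoulli outcomes."""
    return alpha + successes, beta + failures


def beta_mean(alpha, beta):
    """Mean of a Beta(alpha, beta) distribution."""
    return alpha / (alpha + beta)


# Hypothetical prior belief: Beta(2, 2), centred on a success probability of 0.5.
prior = (2.0, 2.0)

# Hypothetical new evidence: 7 successes and 3 failures.
posterior = update_beta_prior(*prior, successes=7, failures=3)

print("prior mean:", beta_mean(*prior))
print("posterior parameters:", posterior)
print("posterior mean:", beta_mean(*posterior))
```

The posterior is Beta(9, 5), whose mean of 9/14 lies between the prior mean (0.5) and the observed success rate (0.7), with no numerical integration required.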

Applications: Bayesian Learning is widely used in a variety of applications, including natural language processing, computer vision, and bioinformatics.

Examples:

A Bayesian Learning model that is used to predict whether a patient has a certain disease based on their symptoms and test results. The prior probability distribution encodes our beliefs about the prevalence of the disease in the population, and the likelihood function encodes the probability of observing the symptoms and test results given the disease.

A Bayesian Learning model that is used to estimate the parameters of a robot's sensor model, by updating the beliefs about the sensor model based on the robot's sensor readings.

In conclusion, Bayesian Learning is a statistical learning method based on Bayesian statistics and probability theory. It is used to update the beliefs about the state of the world based on new evidence. It's powerful and flexible and can be used for a wide range of problems. However, it can be computationally expensive and requires a good understanding of probability theory to implement it correctly.

Bayes' theorem is a fundamental result in probability theory that relates the conditional probability of an event, given some observed information, to the prior probability of the event and the likelihood of that information given the event. Here are some notes on Bayes' theorem:

Definition: Bayes' theorem states that the conditional probability of an event A given that another event B has occurred is proportional to the product of the prior probability of event A and the likelihood of event B given event A. Mathematically, it can be written as: P(A|B) = (P(B|A) * P(A)) / P(B)

Intuition: Bayes' theorem is useful for updating our beliefs about the probability of an event given new information. The prior probability is our initial belief before we see the new information, the likelihood is how probable the new information would be if the event were true, and the posterior probability is our updated belief after we see the new information.

Applications: Bayes' theorem has many applications in machine learning, specifically in the field of Bayesian learning. It can be used for classification problems, where the goal is to find the class label of a given input based on the class probabilities and the likelihood of the input given each class. It can also be used for parameter estimation problems, where the goal is to estimate the values of model parameters given some data.

Example:

A weather forecaster states that there is a 30% chance of rain tomorrow, and you observe that it is cloudy today. According to Bayes' theorem, the probability of rain tomorrow given that it is cloudy today can be calculated as:

P(Rain|Cloudy) = (P(Cloudy|Rain) * P(Rain)) / P(Cloudy)

Here, P(Rain|Cloudy) is the posterior probability, P(Cloudy|Rain) is the likelihood, P(Rain) is the prior probability (the forecaster's 30%), and P(Cloudy) is the normalizing constant.

In conclusion, Bayes' theorem is a fundamental result in probability theory that relates the conditional probability of an event, given some observed information, to the prior probability of the event and the likelihood of that information given the event. It provides a way to update our beliefs about the probability of an event given new information and has many applications in machine learning, particularly in the field of Bayesian learning.
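To make the rain example concrete, the sketch below plugs numbers into the formula. Only the 30% prior comes from the example. The two likelihood values are hypothetical, chosen purely for illustration. The normalizing constant P(Cloudy) is obtained from the law of total probability over the two cases "rain" and "no rain".

```python
def bayes_posterior(prior, likelihood, likelihood_if_false):
    """P(A|B) for a binary event A, given P(A), P(B|A) and P(B|not A)."""
    # Law of total probability: P(B) = P(B|A) P(A) + P(B|not A) P(not A)
    evidence = likelihood * prior + likelihood_if_false * (1.0 - prior)
    return likelihood * prior / evidence


p_rain = 0.30                  # prior, from the forecaster
p_cloudy_given_rain = 0.80     # hypothetical likelihood
p_cloudy_given_no_rain = 0.40  # hypothetical likelihood

p_rain_given_cloudy = bayes_posterior(
    p_rain, p_cloudy_given_rain, p_cloudy_given_no_rain
)
print(f"P(Rain|Cloudy) = {p_rain_given_cloudy:.3f}")
```

With these assumed numbers the posterior is 0.24 / 0.52, about 0.462, so observing clouds raises the belief in rain from 30% to roughly 46%.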

Bayes theorem is a mathematical formula that describes the probability of an event, based on prior knowledge of conditions that might be related to the event. Here are some notes on Bayes theorem:

Definition: Bayes theorem is a mathematical formula that describes the probability of an event, based on prior knowledge of conditions that might be related to the event. It is named after the Reverend Thomas Bayes, whose paper on the theorem was published posthumously in 1763.

Bayes Theorem: Bayes theorem states that the probability of an event (A) given some evidence (B) is proportional to the probability of the evidence (B) given the event (A) multiplied by the prior probability of the event (A). Bayes theorem is often written as P(A|B) = P(B|A) * P(A) / P(B)

Concept learning, also known as concept formation, is a process of learning a general rule or concept from a set of examples. In machine learning it is usually treated as a supervised task: the learner is given examples labeled as belonging or not belonging to the concept and must infer a rule that separates them. Here are some notes on Concept learning:

Definition: Concept learning is a process of learning a general rule or concept from a set of examples. It is usually framed as supervised learning, where the goal is to infer from labeled positive and negative examples a rule that decides whether a new example belongs to the concept.

Inductive Inference: Concept learning is based on the process of inductive inference. Inductive inference is the process of making generalizations from specific examples. In concept learning, the model uses the examples to infer a general rule or concept that explains the relationship between the input and output.

Concept Learning Algorithms: There are several algorithms used for concept learning, including decision trees, rule-based systems, and nearest-neighbor methods.

Decision Trees: Decision trees are a popular algorithm for concept learning. They use a tree structure to represent the possible concepts and make decisions based on the input.

Rule-based Systems: Rule-based systems use a set of if-then rules to represent the concepts. The rules are learned from the examples and can be used to classify new examples.

Nearest-neighbor Methods: Nearest-neighbor methods are based on the idea that similar examples have similar concepts. The model classifies new examples based on the most similar examples in the training set.
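A minimal sketch of the nearest-neighbor idea, assuming numeric feature vectors and Euclidean distance (other distance measures are equally valid choices). It uses a single nearest neighbor. The function name, points, and labels are hypothetical.

```python
import math


def nearest_neighbor_predict(train_points, train_labels, query):
    """Return the label of the training point closest to the query."""
    best_index = min(
        range(len(train_points)),
        key=lambda i: math.dist(train_points[i], query),  # Euclidean distance
    )
    return train_labels[best_index]


# Hypothetical training set with two concepts.
train_points = [(1.0, 1.0), (1.0, 2.0), (5.0, 5.0), (6.0, 5.0)]
train_labels = ["small", "small", "large", "large"]

first = nearest_neighbor_predict(train_points, train_labels, (2.0, 1.0))
second = nearest_neighbor_predict(train_points, train_labels, (5.0, 6.0))
print(first, second)
```

The first query is closest to (1.0, 1.0) and is labeled "small", while the second is closest to (5.0, 5.0) and is labeled "large". Note that no explicit rule is learned: the training set itself acts as the model.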

Evaluation Metrics: The performance of concept learning models can be evaluated using metrics such as accuracy, precision, recall, and F1-score.

Applications: Concept learning algorithms are widely used in a variety of applications, including natural language processing, computer vision, speech recognition, and bioinformatics.

Limitations: Concept learning algorithms can be limited by the quality and representativeness of the training data, and may not be able to generalize well to new, unseen data. Additionally, the results of concept learning can be hard to interpret, and the discovered concepts may not be meaningful.

In conclusion, Concept learning is the process of inferring a general rule or concept from a set of examples, usually labeled as belonging or not belonging to the concept. It is based on inductive inference, and several algorithms, such as decision trees, rule-based systems, and nearest-neighbor methods, are used to implement it. It is used in many applications, and its results depend heavily on the quality and representativeness of the training data.

The Bayes Optimal Classifier (BOC) is a theoretical classifier that makes the best possible predictions based on the probability of the input data. It is a type of Bayesian classifier that is based on Bayes' theorem, which states that the probability of an event given certain observations is proportional to the prior probability of the event multiplied by the likelihood of the observations given the event. Here are some notes on the Bayes Optimal Classifier:

Definition: The Bayes Optimal Classifier (BOC) is a theoretical classifier that makes the best possible predictions based on the probability of the input data. It is based on Bayes' theorem and is considered the "gold standard" for classification tasks.

Bayes' Theorem: BOC is based on Bayes' theorem, which states that the probability of an event given certain observations is proportional to the prior probability of the event multiplied by the likelihood of the observations given the event. In the context of classification, the BOC combines the likelihood of the input data given each class label with the prior probability of that class label, and predicts the class with the highest resulting posterior probability.
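The prediction rule can be sketched for a toy problem in which the distributions are assumed to be fully known, which is precisely the assumption that rarely holds in practice. The function name, class names, priors, and likelihood table are hypothetical and exist only to show the computation.

```python
def bayes_classify(priors, likelihoods, observation):
    """Pick the class with the highest posterior, given known distributions."""
    # Unnormalized posterior for each class: P(class) * P(observation | class)
    scores = {
        label: priors[label] * likelihoods[label][observation] for label in priors
    }
    evidence = sum(scores.values())  # P(observation)
    posteriors = {label: score / evidence for label, score in scores.items()}
    best_label = max(posteriors, key=posteriors.get)
    return best_label, posteriors


# Hypothetical, assumed-known distributions for a single diagnostic test.
priors = {"disease": 0.01, "healthy": 0.99}
likelihoods = {
    "disease": {"positive": 0.95, "negative": 0.05},
    "healthy": {"positive": 0.10, "negative": 0.90},
}

label, posteriors = bayes_classify(priors, likelihoods, "positive")
print(label, posteriors)
```

Even after a positive test, the predicted class here is "healthy": the posterior probability of disease is 0.0095 / 0.1085, about 8.8%, because the low prior outweighs the high likelihood.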

Assumptions: The BOC makes the assumption that the data is generated by a probabilistic process and that the probability distributions involved (the prior probabilities of the class labels and the distribution of the input features given each class) are known. Unlike the Naive Bayes classifier, it does not require the input features to be independent of one another.

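Evaluation Metrics: The BOC is the best possible classifier in terms of expected accuracy: because it makes predictions based on the true underlying probability distributions of the data, no other classifier can achieve a lower expected error rate on the same problem. Its error rate is known as the Bayes error rate.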

Applications: The BOC is mainly used as a theoretical benchmark for comparing the performance of other classifiers.

Limitations: The BOC is not practical to use in real-world scenarios, as it requires knowledge of the true underlying probability distributions of the data, which is often not available. Additionally, the BOC can be computationally expensive, as it requires the calculation of the likelihood and prior probabilities for all possible class labels for each input.

Real-world Scenario: In real-world scenarios, the BOC is not practical to use. For example, in the healthcare industry, a doctor does not know the true underlying probability distributions that relate a patient's medical history and test results to their disease, and so cannot make the best possible predictions in this sense. However, the BOC can be used as a benchmark to evaluate the performance of other classifiers, such as logistic regression or decision trees.

In conclusion, the Bayes Optimal Classifier (BOC) is a theoretical classifier that makes the best possible predictions based on the probability of the input data. It is based on Bayes' theorem and is considered the "gold standard" for classification tasks. It is not practical to use in real-world scenarios, because it requires knowledge of the true underlying probability distributions of the data, which is often not available, and because it can be computationally expensive. It is mainly used as a theoretical benchmark for comparing the performance of other classifiers.

Naive Bayes classifier is a probabilistic algorithm based on Bayes theorem for classification tasks. It is a simple and efficient algorithm that makes the naive assumption that all the features are conditionally independent of each other given the class. Here are some notes on Naive Bayes classifier:

Definition: Naive Bayes classifier is a probabilistic algorithm based on Bayes theorem for classification tasks. It makes the naive assumption that all the features are conditionally independent of each other given the class, so the likelihood of the input factorizes into a product of per-feature likelihoods, and it uses this to calculate the probability of a class given the input features.

Bayes theorem: Bayes theorem states that the probability of a hypothesis (H) given some evidence (E) is proportional to the probability of the evidence given the hypothesis (P(E|H)) multiplied by the prior probability of the hypothesis (P(H)). In the case of Naive Bayes classifier, the hypothesis is the class, and the evidence is the input features.

Types of Naive Bayes Classifier: There are several types of Naive Bayes classifiers, including Gaussian Naive Bayes, Multinomial Naive Bayes, and Bernoulli Naive Bayes.

Gaussian Naive Bayes: This algorithm is used for continuous features. It assumes that the values of each feature are normally distributed within each class and calculates the likelihood of the data given the class using the Gaussian distribution.
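A minimal sketch of Gaussian Naive Bayes in plain Python follows. It estimates a mean and variance per feature and per class, then scores a new input by adding the log prior to the sum of per-feature Gaussian log-likelihoods. The function names and data are hypothetical. The small constant added to each variance is an assumption made here to avoid division by zero, not part of the definition.

```python
import math
from collections import defaultdict


def fit_gaussian_nb(X, y):
    """Estimate the class prior and per-feature mean/variance for each class."""
    rows_by_class = defaultdict(list)
    for row, label in zip(X, y):
        rows_by_class[label].append(row)

    model = {}
    for label, rows in rows_by_class.items():
        n = len(rows)
        columns = list(zip(*rows))
        means = [sum(col) / n for col in columns]
        # 1e-9 is an assumed smoothing term that keeps variances strictly positive.
        variances = [
            sum((v - m) ** 2 for v in col) / n + 1e-9
            for col, m in zip(columns, means)
        ]
        model[label] = (n / len(X), means, variances)
    return model


def log_gaussian(x, mean, variance):
    """Log density of a normal distribution at x."""
    return -0.5 * math.log(2 * math.pi * variance) - (x - mean) ** 2 / (2 * variance)


def predict_gaussian_nb(model, row):
    """Class with the highest log prior plus summed feature log-likelihoods."""
    def log_posterior(label):
        prior, means, variances = model[label]
        return math.log(prior) + sum(
            log_gaussian(x, m, v) for x, m, v in zip(row, means, variances)
        )
    return max(model, key=log_posterior)


# Hypothetical two-feature dataset with two well-separated classes.
X = [[1.0, 2.0], [1.2, 1.8], [0.8, 2.2], [4.0, 5.0], [4.2, 4.8], [3.8, 5.2]]
y = ["a", "a", "a", "b", "b", "b"]
model = fit_gaussian_nb(X, y)
print(predict_gaussian_nb(model, [1.1, 2.1]), predict_gaussian_nb(model, [4.1, 5.1]))
```

Working with log probabilities rather than raw products is a common design choice, since multiplying many small likelihoods can underflow.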

Multinomial Naive Bayes: This algorithm is used for discrete count data, such as word counts in text data. It calculates the likelihood of the data given the class using the multinomial distribution.
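The following sketch applies Multinomial Naive Bayes to tokenized text. Two choices here are assumptions rather than part of the description above: add-one (Laplace) smoothing, so that a word unseen in a class does not produce a zero probability, and skipping words that never appeared in training. The function names, documents, and labels are hypothetical.

```python
import math
from collections import Counter


def fit_multinomial_nb(documents, labels, smoothing=1.0):
    """Estimate log priors and smoothed per-class word log-probabilities."""
    vocabulary = {word for doc in documents for word in doc}
    docs_per_class = Counter(labels)
    word_counts = {label: Counter() for label in docs_per_class}
    for doc, label in zip(documents, labels):
        word_counts[label].update(doc)

    model = {}
    for label, n_docs in docs_per_class.items():
        total = sum(word_counts[label].values()) + smoothing * len(vocabulary)
        log_likelihood = {
            word: math.log((word_counts[label][word] + smoothing) / total)
            for word in vocabulary
        }
        model[label] = (math.log(n_docs / len(labels)), log_likelihood)
    return model


def predict_multinomial_nb(model, doc):
    """Class with the highest log prior plus summed word log-probabilities."""
    def score(label):
        log_prior, log_likelihood = model[label]
        # Words never seen during training are ignored (an assumption).
        return log_prior + sum(
            log_likelihood[word] for word in doc if word in log_likelihood
        )
    return max(model, key=score)


# Hypothetical tokenized emails.
documents = [
    ["win", "money", "now"],
    ["free", "money"],
    ["meeting", "tomorrow"],
    ["project", "meeting", "now"],
]
labels = ["spam", "spam", "ham", "ham"]
nb_model = fit_multinomial_nb(documents, labels)
print(predict_multinomial_nb(nb_model, ["free", "money"]))
print(predict_multinomial_nb(nb_model, ["meeting", "tomorrow"]))
```

With this toy data the first message is classified as "spam" and the second as "ham", because each contains words that occur more often in the corresponding class.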